Declare the grain of your dataĭeclaring the grain is the act of uniquely identifying a record in your data source. Identifying the correct business process is critical-getting this step wrong can impact the entire data mart (it can cause the grain to be duplicated and incorrect metrics on the final reports). The data (business process) needs to be integrated across various departments, in this case, marketing can access the sales data. Looking at our sample dataset mentioned earlier, we can clearly see the business process is the sales made for a given event.Ī common mistake made is using departments of a company as the business process.

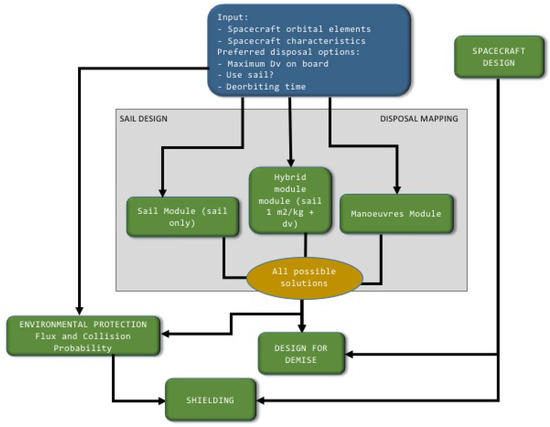

The business process is then persisted as a data mart in the form of dimensions and facts. This is a good starting point to identify various sources for a business process. Usually, companies have some sort of operational source system that generates their data in its raw format. In simple terms, identifying the business process is identifying a measurable event that generates data within an organization. Some degree of data warehousing knowledge will be helpful to understand the topics.An Amazon Simple Storage Service (Amazon S3) bucket where files that will be loaded into Amazon Redshift are stored.An Amazon Redshift cluster or an Amazon Redshift Serverless endpoint.Prerequisitesįor this walkthrough, you should have the following prerequisites: The following tables show examples of the data for ticket sales and venues.Īccording to the Kimball dimensional modeling methodology, there are four key steps in designing a dimensional model:Īdditionally, we add a fifth step for demonstration purposes, which is to report and analyze business events. For this post, we have narrowed down the dataset for simplicity and demonstration purposes. Lastly, we use Amazon QuickSight to gain insights on the modeled data in the form of a QuickSight dashboard.įor this solution, we use a sample dataset (normalized) provided by Amazon Redshift for event ticket sales. We schedule the loading of the dimensions and facts using the Amazon Redshift Query Editor V2. Data is loaded and staged using the COPY command, the data in the dimensions is loaded using the MERGE statement, and facts will be joined to the dimensions where insights are derived from. After that, we create a data mart using Amazon Redshift with a dimensional data model including dimension and fact tables. In the following sections, we first discuss and demonstrate the key aspects of the dimensional model. The following diagram illustrates the solution architecture.

#REDSHIFT SPACE BY DB HOW TO#

Overall, the post will give you a clear understanding of how to use dimensional modeling in Amazon Redshift. We show how to perform extract, transform, and load (ELT), an integration process focused on getting the raw data from a data lake into a staging layer to perform the modeling. In this post, we discuss how to implement a dimensional model, specifically the Kimball methodology. We discuss implementing dimensions and facts within Amazon Redshift. Amazon Redshift provides built-in features to accelerate the process of modeling, orchestrating, and reporting from a dimensional model. You can structure your data, measure business processes, and get valuable insights quickly can be done by using a dimensional model. Amazon Redshift is a fully managed and petabyte-scale cloud data warehouse that is used by tens of thousands of customers to process exabytes of data every day to power their analytics workload.
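As a minimal sketch of what declaring the grain means in practice, the following Python checks whether a candidate set of columns uniquely identifies every record. It also shows how a duplicated grain silently inflates a metric such as total sales. The rows and the column names (`salesid`, `eventid`, `qtysold`, `pricepaid`) are hypothetical, loosely modeled on a ticket sales table. Against a staged table in Amazon Redshift, the equivalent check would typically be a `GROUP BY ... HAVING COUNT(*) > 1` query on the candidate grain columns.

```python
from collections import Counter
from typing import Iterable, Mapping, Sequence


def grain_violations(rows: Iterable[Mapping[str, object]], grain: Sequence[str]) -> dict:
    """Return the grain keys that occur more than once, with their counts.

    An empty result means the chosen columns uniquely identify every record,
    so they are a valid declaration of the grain for these rows."""
    counts = Counter(tuple(row[col] for col in grain) for row in rows)
    return {key: n for key, n in counts.items() if n > 1}


# Hypothetical ticket sales rows; the column names are illustrative assumptions.
sales = [
    {"salesid": 1, "eventid": 10, "qtysold": 2, "pricepaid": 100.0},
    {"salesid": 2, "eventid": 10, "qtysold": 1, "pricepaid": 50.0},
    {"salesid": 3, "eventid": 11, "qtysold": 4, "pricepaid": 320.0},
]

# One row per sale: salesid alone identifies each record.
print(grain_violations(sales, ["salesid"]))  # {}
# eventid is too coarse a grain: two sales share event 10.
print(grain_violations(sales, ["eventid"]))  # {(10,): 2}

# Simulate a load that accidentally duplicates sale 1 (for example, after a
# join at the wrong grain): the total sales metric silently inflates.
duplicated = sales + [sales[0]]
print(sum(r["pricepaid"] for r in sales))         # 470.0
print(sum(r["pricepaid"] for r in duplicated))    # 570.0
print(grain_violations(duplicated, ["salesid"]))  # {(1,): 2}
```

Running such a check on staged data before loading the fact table is one way to catch a mis-declared grain before it reaches the reports.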

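The solution loads dimension data with the MERGE statement. The following in-memory Python sketch only approximates what a MERGE with `WHEN MATCHED THEN UPDATE` and `WHEN NOT MATCHED THEN INSERT` clauses does to a dimension: staged rows whose key already exists are overwritten in place, and the rest are inserted. It is not how Amazon Redshift implements MERGE. The `merge_dimension` helper, the venue columns (`venueid`, `venuename`, `venuecity`), and the values are hypothetical. The sketch also conservatively rejects duplicate keys in the staged data, because a target row matched by several source rows would make the update ambiguous.

```python
from typing import Dict, Iterable, Mapping, Tuple

Row = Dict[str, object]


def merge_dimension(
    dimension: Dict[object, Row],
    staged: Iterable[Mapping[str, object]],
    key: str,
) -> Tuple[int, int]:
    """Upsert staged rows into an in-memory dimension keyed by `key`.

    Matched keys are overwritten (like WHEN MATCHED THEN UPDATE);
    unmatched keys are added (like WHEN NOT MATCHED THEN INSERT).
    Returns the (updated, inserted) row counts."""
    staged = list(staged)
    keys = [row[key] for row in staged]
    if len(keys) != len(set(keys)):
        # Conservative rule for this sketch: each staged key must be unique.
        raise ValueError("duplicate keys in staged data")
    updated = inserted = 0
    for row in staged:
        if row[key] in dimension:
            dimension[row[key]].update(row)  # overwrite existing attributes
            updated += 1
        else:
            dimension[row[key]] = dict(row)  # add a new dimension member
            inserted += 1
    return updated, inserted


# Hypothetical venue dimension and staging data.
venue_dim = {1: {"venueid": 1, "venuename": "Arena A", "venuecity": "Seattle"}}
staging = [
    {"venueid": 1, "venuename": "Arena A", "venuecity": "Tacoma"},  # changed city
    {"venueid": 2, "venuename": "Hall B", "venuecity": "Denver"},   # new venue
]
print(merge_dimension(venue_dim, staging, "venueid"))  # (1, 1)
print(venue_dim[1]["venuecity"])  # Tacoma
```

Overwriting matched rows like this keeps only the latest attribute values and does not preserve history.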